NLP for Human-Centric Sciences

Can NLP predict heroin addiction outcomes, uncover suicide risks, or simulate and influence brain activity? Could LLMs one day earn a Nobel Prize for their role in simulating human behavior – and what part do NLP scientists play in crafting this reality? This post explores these questions and more, positioning NLP not just as a technological enabler but as a scientific power-multiplier for advancing human-centric sciences.

In this post, we delve into the evolving relationship between Natural Language Processing (NLP) and the broader realm of behavioral, cognitive, social and brain sciences, presenting NLP not only as a technological tool but as a transformative force multiplier across human-focused sciences. Language, after all, permeates every part of human life, offering insights far beyond data inputs for algorithms. Through examples spanning psychology to medicine, we showcase how NLP can serve as a window into the human mind, facilitating hypothesis generation, validation, human simulation, and more. We highlight its role in predicting rehabilitation outcomes, modeling physiological brain function, and uncovering new risk factors for suicide. Finally, we reflect on the responsibilities of NLP scientists, underlining the challenges of interdisciplinary collaboration and the commitment required to produce meaningful, interpretable scientific insights.

Introduction

2024 marked a groundbreaking year for the relationship between AI and the natural sciences, with two Nobel Prizes awarded for contributions powered by, or deeply tied to, AI, specifically deep learning. John J. Hopfield and Geoffrey E. Hinton received the Nobel Prize in Physics for foundational advances in artificial neural networks, such as the Hopfield network, capable of storing and reconstructing patterns, and the Boltzmann machine, a generative model that can classify images and generate new examples resembling its training data. In Chemistry, Demis Hassabis and John Jumper were recognized for AlphaFold, a deep learning system that accurately predicts protein structures, a long-standing challenge in molecular biology; they shared the prize with David Baker, honored for computational protein design.

AI, particularly deep learning, is indisputably transformative, impacting technology, society, and now, as these Nobel Prizes affirm, research in the natural sciences.

Yet two questions arise. First, has deep learning achieved similar breakthroughs in the human-centered sciences, such as psychology, psychiatry, sociology, or behavioral economics? Second, have any major scientific achievements, whether in the exact or the human sciences, stemmed from NLP? Put simply, if deep learning has succeeded in modeling physical phenomena, why does it struggle to capture human-centered scientific phenomena? And why hasn’t NLP, which relies on deep learning, demonstrated similar success?

We believe the reason is twofold: the subjective, hard-to-define nature of human-centric sciences, and the endless nuances inherent in language. Modeling scientific problems that lack clear-cut definitions, using a tool as flexible as language – changing across communities, generations, genders, and cognitive states – is an exceptionally complex endeavor. Additionally, for some human-centric problems, language alone may not suffice as input. Despite these challenges, as we will discuss, modeling science through language remains essential, as it provides a unique window into human cognition and behavior.

NLP is already applied across numerous intersections of language and natural or human-centered sciences, from analyzing clinical records

Science and NLP

Merriam-Webster defines Science as:

A system of knowledge focused on general truths and laws, tested through empirical methods: identifying problems, gathering data through observation and experimentation, and testing hypotheses.

Scientific disciplines are often divided into several major groups:

Formal sciences (logic, mathematics, etc.), Natural sciences (physics, chemistry, biology, etc.), Social sciences and Humanities (sociology, psychology, etc.), and Applied sciences (medicine, computer science, etc.).

Where does NLP fit into this classification? The field of NLP emerged from the fusion of two different-yet-related scientific fields: linguistics, part of the social sciences and humanities, and computer science, which belongs to the applied sciences. Originally, NLP was driven by two key motivations:

(1) automating language understanding and (2) deciphering the structure of language.

Early efforts centered on parsing language for practical applications, such as translation and question-answering, relying on grammar-based, often rule-driven systems. The introduction of statistical methods, like Hidden Markov Models (HMMs) and probabilistic parsing, marked a shift toward data-driven models capable of learning from vast text corpora and handling larger contexts, enabling advanced tasks like sentiment analysis and text generation. Today, with abundant data and advanced computational resources, NLP is largely dominated by deep learning and large-scale models such as Large Language Models (LLMs), capable of tackling highly complex, context-sensitive tasks.

The remarkable capabilities of LLMs are one of the two main reasons we believe that now is the ideal moment to usher in a new era, in which NLP serves as a power multiplier for human-centric sciences. Although originally developed with the relatively straightforward goal of predicting the next word, LLMs have, as a byproduct, come to exhibit human-like behaviors, simulating interactions to a degree that sometimes even confounds us

Apart from the rise of LLMs, we believe another factor may encourage researchers to view NLP as a power multiplier in human-centric sciences: a fresh perspective on language itself. Traditionally, we see language as a sequence of words: a straightforward conduit of information or a simple data source for analysis. In the next section, we propose a different view: language not merely as data for algorithms, but as a window into the human mind.

Language as a Window Into the Mind

Language is the natural way in which humans express their complex collection of thoughts, knowledge, emotions, intentions, and experiences; i.e., it is how humans process, perceive, and interact with the world. The power of language (and NLP as a means of exploring it) for scientific exploration lies in its unique blend of structure (its “mathematical” nature) and depth of human nuances

The Individual Level

Through language, we communicate our ideas, reveal beliefs, and express emotions, sharing our subjective reality. Word choice and linguistic nuances provide insights into cognitive and emotional states, making language a powerful tool for understanding human consciousness and behavior. Accordingly, studying language touches the core of what it means to be human, which is why it is central to fields like psychology, cognitive science, and neuroscience – disciplines dedicated to exploring the complexities of the individual’s mind.

In psychology, for example, language mirrors the intricacies of the human psyche, revealing mental and emotional states, and therapeutic conversations rely on verbal expression, where word choices and narrative structures can uncover underlying thoughts and behaviors. Persistent negative language may indicate depression

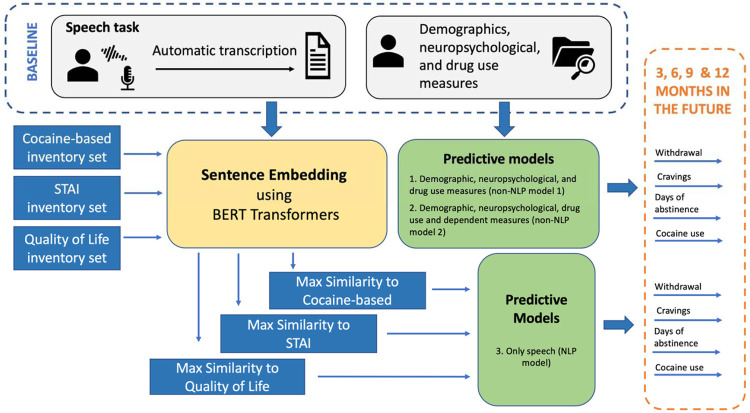

One example of assessing treatment progression is presented in a pioneering study by

The Collective Level

While the first view of language focuses on the individual mind – exploring personal cognition, emotion, and experience – this second perspective shifts to language as a model of communication within groups and societies. This approach allows us to analyze how language shapes and reflects social interactions and cultural norms, providing insights into collective behavior across fields like social science, political science, economics, and the humanities.

In social science, language patterns provide insights into social behaviors, group dynamics, and societal trends

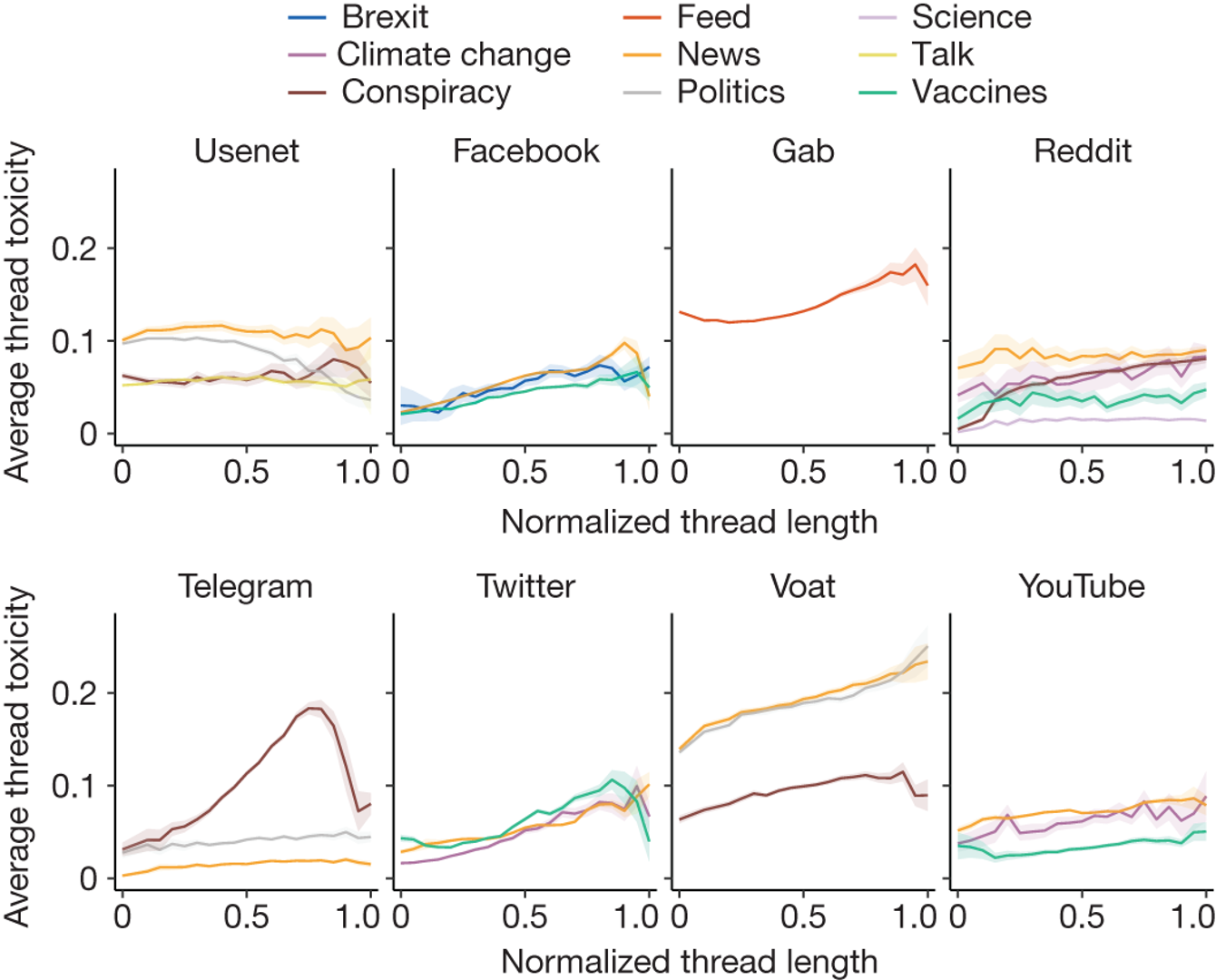

Indeed, computational social science has emerged as one of the most established subfields of NLP. For example, in a study published in Nature

The authors found that, while longer conversations often exhibit higher levels of toxicity, inflammatory language doesn’t always deter participation, nor does hostility necessarily escalate as discussions progress. Their analysis suggests that debates and contrasting viewpoints among users significantly contribute to more intense and contentious interactions. This study exemplifies how analyzing the language of the collective can uncover nuanced dynamics in online interactions, revealing patterns that shape digital discourse.

Applying NLP in Scientific Quests

In the previous section, we proposed a new way to frame language – as a window into the human mind, on an individual and collective level – and provided several examples that put this perspective into practice, driving remarkable innovation across various scientific fields.

In this section, we turn our attention to practicality. For NLP practitioners seeking to use NLP as a tool for scientific inquiry, we present several practical approaches, along with examples, that showcase how NLP can creatively help drive scientific discovery and reveal new insights about our world. In particular, we will describe how NLP can:

- Generate scientific hypotheses.

- Validate existing theories.

- Empower simulations of human interaction, behavior, or the brain.

- Extract applicable insights from scientific corpora.

Hypothesis Generation (Bottom-Up)

As previously mentioned, NLP methods are often applied to large text datasets, where they excel at learning prediction models to forecast specific phenomena. While this capability is undoubtedly impactful, we argue that predictions alone – based on patterns found in data – do not always help us scientifically model and understand the reality around us. A more effective approach might be to start with a hypothesis, following classic scientific principles. But what if we’re uncertain about where to begin? In this case, we propose using NLP models to generate novel hypotheses – a ‘proposed explanation for a phenomenon.’

Typically, scientists generate hypotheses through a top-down approach, drawing from established theories or prior knowledge to guide their inquiries. While this approach leverages existing understanding, it may sometimes miss unexpected patterns within the data. By contrast, NLP models, especially those based on deep learning, function as powerful bottom-up tools – a strong predictive model can serve as a generator of insights. Through interpretable methods that clarify the basis of model predictions or reveal what the model encodes in its representations, we can transform predictive power into scientific discovery.
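To make the bottom-up idea concrete, here is a minimal sketch using one of the simplest interpretable models: the weighted log-odds ratio with a Dirichlet prior (popularized by Monroe, Colaresi, and Quinn as "Fightin' Words"), which scores each word by how strongly it distinguishes two groups of texts. This is a swapped-in illustration, not the method of any study discussed here; the two outcome groups and the toy documents are hypothetical. The top-ranked words are candidate hypotheses for domain experts to examine, not findings.

```python
import math
from collections import Counter

def log_odds_z_scores(group_a, group_b, prior=1.0):
    """Weighted log-odds ratio with a uniform Dirichlet prior.

    group_a, group_b: lists of tokenized documents (lists of words).
    Returns {word: z-score}; a positive z means the word is more
    characteristic of group_a, a negative z of group_b.
    """
    counts_a = Counter(w for doc in group_a for w in doc)
    counts_b = Counter(w for doc in group_b for w in doc)
    vocab = set(counts_a) | set(counts_b)
    n_a, n_b = sum(counts_a.values()), sum(counts_b.values())
    alpha0 = prior * len(vocab)  # total pseudo-count added to each group
    scores = {}
    for w in vocab:
        ya = counts_a[w] + prior  # smoothed count in group a
        yb = counts_b[w] + prior  # smoothed count in group b
        # Difference in the word's log-odds between the two groups.
        delta = (math.log(ya / (n_a + alpha0 - ya))
                 - math.log(yb / (n_b + alpha0 - yb)))
        # Divide by the approximate standard deviation to get a z-score.
        scores[w] = delta / math.sqrt(1.0 / ya + 1.0 / yb)
    return scores

# Hypothetical toy data: short diary snippets from two outcome groups.
improved = [["feel", "hopeful", "today"], ["sleeping", "better", "hopeful"]]
not_improved = [["feel", "alone", "today"], ["cannot", "sleep", "alone"]]
z = log_odds_z_scores(improved, not_improved)
for word in sorted(z, key=z.get, reverse=True):
    print(f"{word:10s} {z[word]:+.2f}")
```

With a deep model, attribution or probing methods would play the same role; either way, the ranked, inspectable signal is the output that feeds hypothesis generation, not just the prediction.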

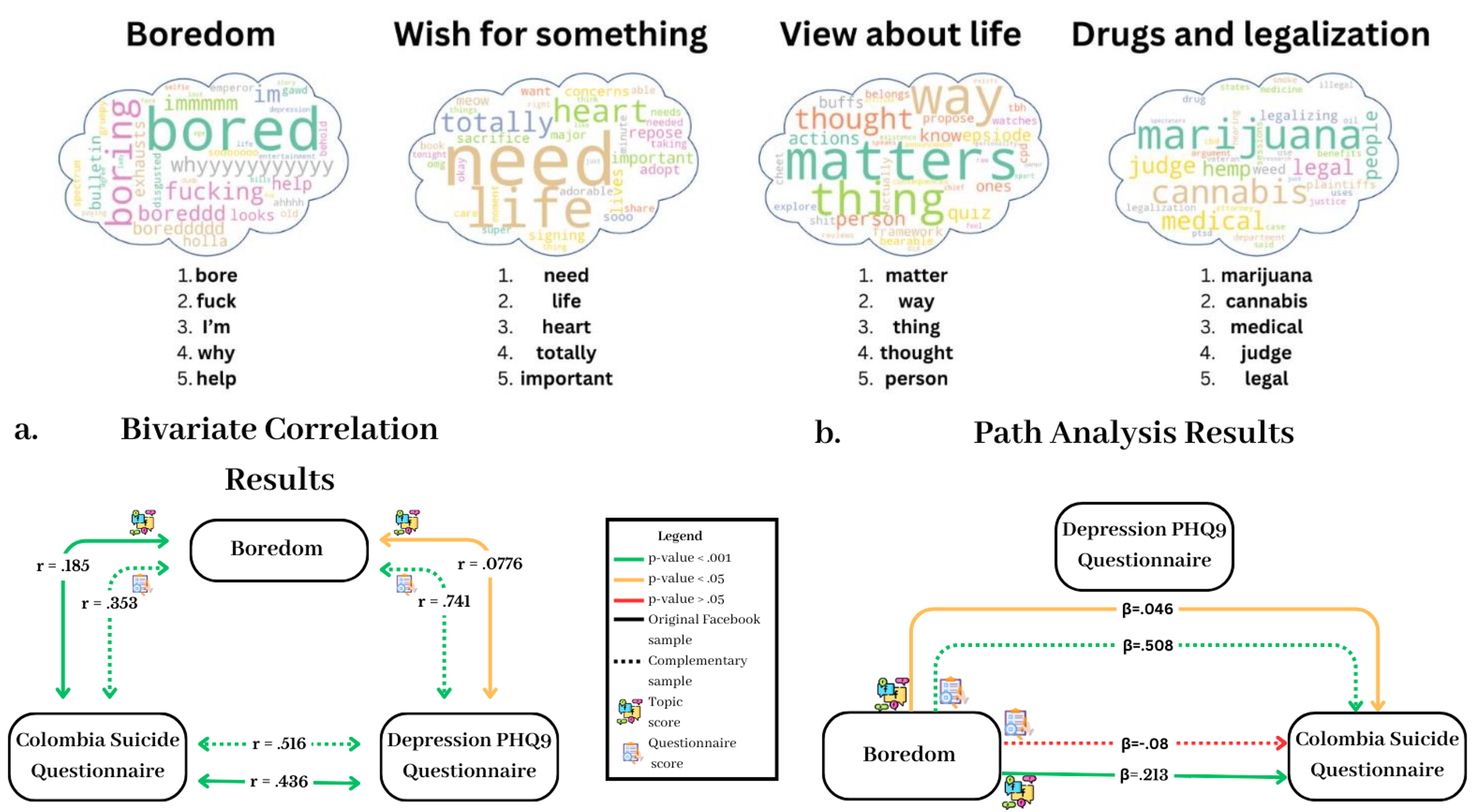

One example of this approach is presented in

Theory Validation (Top-Down)

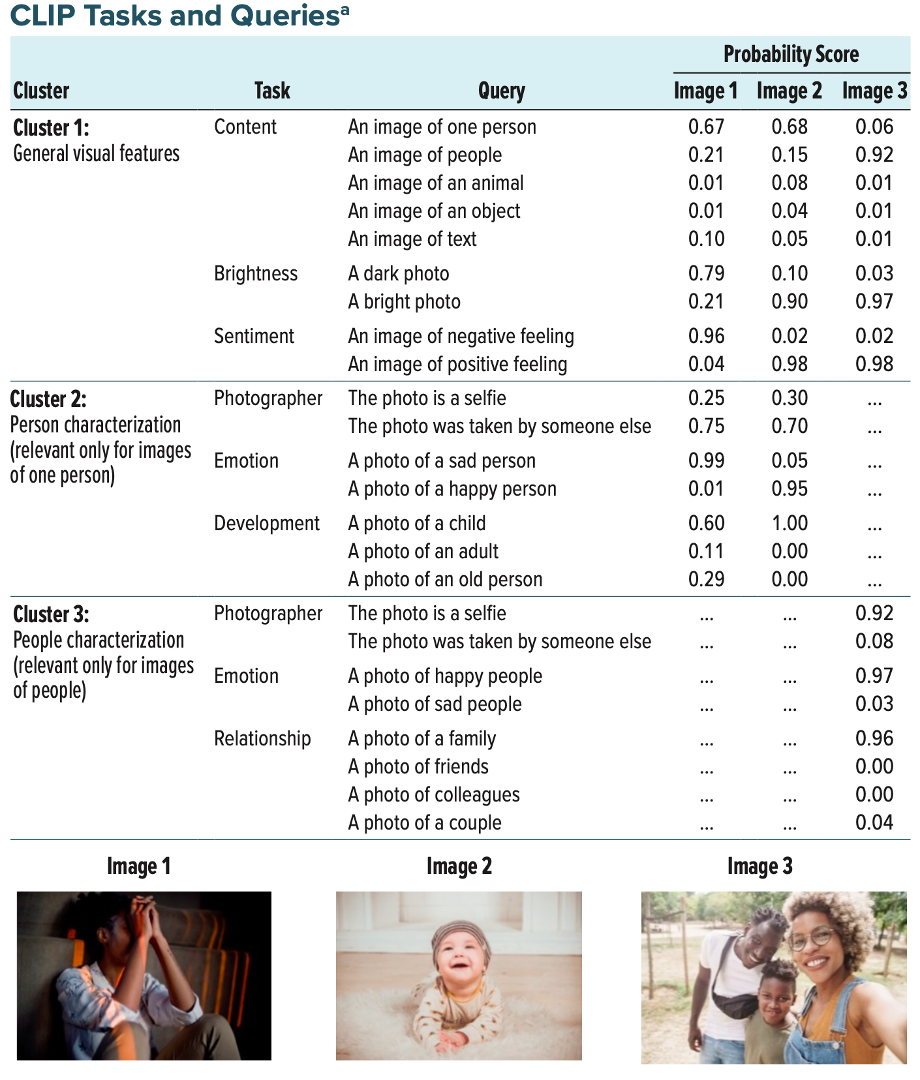

In cases where a scientific hypothesis already exists, NLP models can be leveraged to validate and strengthen it. In a separate study on suicide

Guided by domain experts, the researchers aimed to translate two psychiatric theories – the Interpersonal Theory

As this work demonstrates, combining NLP with other modalities, such as images, can significantly enhance the scientific insights “NLP for science” offers. But what if we took this potential further by embracing ‘extreme multi-modality’? It’s entirely possible to envision models that incorporate not only text, images, and videos, but also brain scans, physiological biomarkers such as blood tests, data from smartwatches, and more. Such a cohesive, NLP-centered multi-modal approach could truly transform scientific research. However, achieving this potential requires the NLP community to actively develop suitable architectures and models. “NLP for science” entails more than merely applying NLP to scientific problems; it calls for foundation models designed with a multi-modal, text-centric focus, a gap yet to be addressed.

Simulating Human Behavior

Simulating and replicating human behavior with LLMs offers scientists a fast and affordable way to gain insights into social and economic scenarios. By generating human-like responses, LLMs can enable researchers to pilot studies, test experimental variations, examine different stimuli, and simulate demographics that are difficult to reach, thereby uncovering potential biases or clarity issues in study design. These models streamline the experimental process, allowing for the rapid testing of multiple approaches and helping identify the most effective methods, thus reducing the need for resource-intensive trials with human participants.
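A minimal sketch of the piloting step, assuming a simple full-factorial design: every question wording is crossed with every stimulus and every simulated participant profile, producing one prompt per condition. The ultimatum-style offers, the personas, and the `simulate_response` placeholder are all hypothetical; the actual model call depends on whichever LLM client you use.

```python
from itertools import product

# Hypothetical study factors; replace with your own conditions.
WORDINGS = [
    "Do you accept this offer?",
    "Would you be willing to take this deal?",
]
STIMULI = [
    "The other player offers you $2 out of $10.",
    "The other player offers you $5 out of $10.",
]
PERSONAS = [
    {"age": 22, "occupation": "student"},
    {"age": 67, "occupation": "retired teacher"},
]

def build_prompts(wordings, stimuli, personas):
    """Cross every wording, stimulus, and persona (a full factorial design)."""
    prompts = []
    for wording, stimulus, persona in product(wordings, stimuli, personas):
        text = (
            f"You are a {persona['age']}-year-old {persona['occupation']} "
            f"taking part in an economic game. {stimulus} {wording} "
            "Answer 'yes' or 'no'."
        )
        prompts.append({"wording": wording, "stimulus": stimulus,
                        "persona": persona, "prompt": text})
    return prompts

def simulate_response(prompt: str) -> str:
    """Hypothetical placeholder: swap in a call to the LLM client you use."""
    raise NotImplementedError("connect an LLM client here")

prompts = build_prompts(WORDINGS, STIMULI, PERSONAS)
print(len(prompts), "simulated conditions")
print(prompts[0]["prompt"])
```

Sending each prompt several times, and with paraphrased wordings, gives a rough sense of how sensitive the simulated answers are to prompt variations before anything is fielded with human participants.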

NLP models, particularly LLMs, have recently shown potential as tools to track and emulate human behavior. This has been validated by multiple studies, including

In another example focused on game theory,

Simulating the Human Brain

With language serving as a direct conduit into the biological mechanisms of the brain, NLP possesses the remarkable capability to model aspects of the human brain itself. Researchers approach this by measuring brain activity using techniques like functional Magnetic Resonance Imaging (fMRI) and Electroencephalography (EEG), then aligning the internal representations of NLP models, such as GPT-2 or larger LLMs like GPT-4

This research holds promise for the future, enabling simulations with NLP models instead of humans and overcoming traditional neuroscience limitations, such as invasive procedures, high costs, and limited access to specialized equipment. Additionally, this line of research could lead to developing methods of decoding neural signals into speech

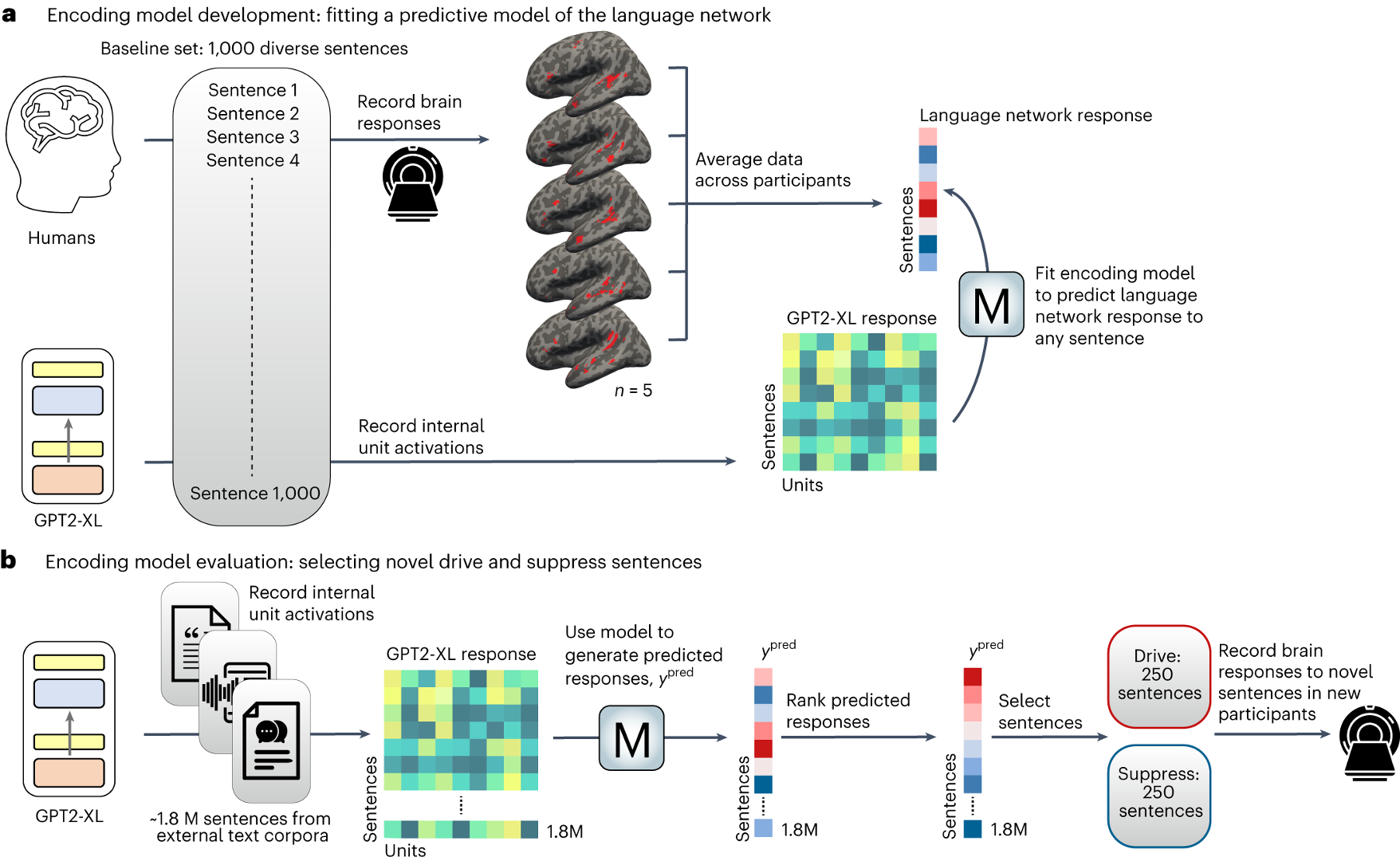

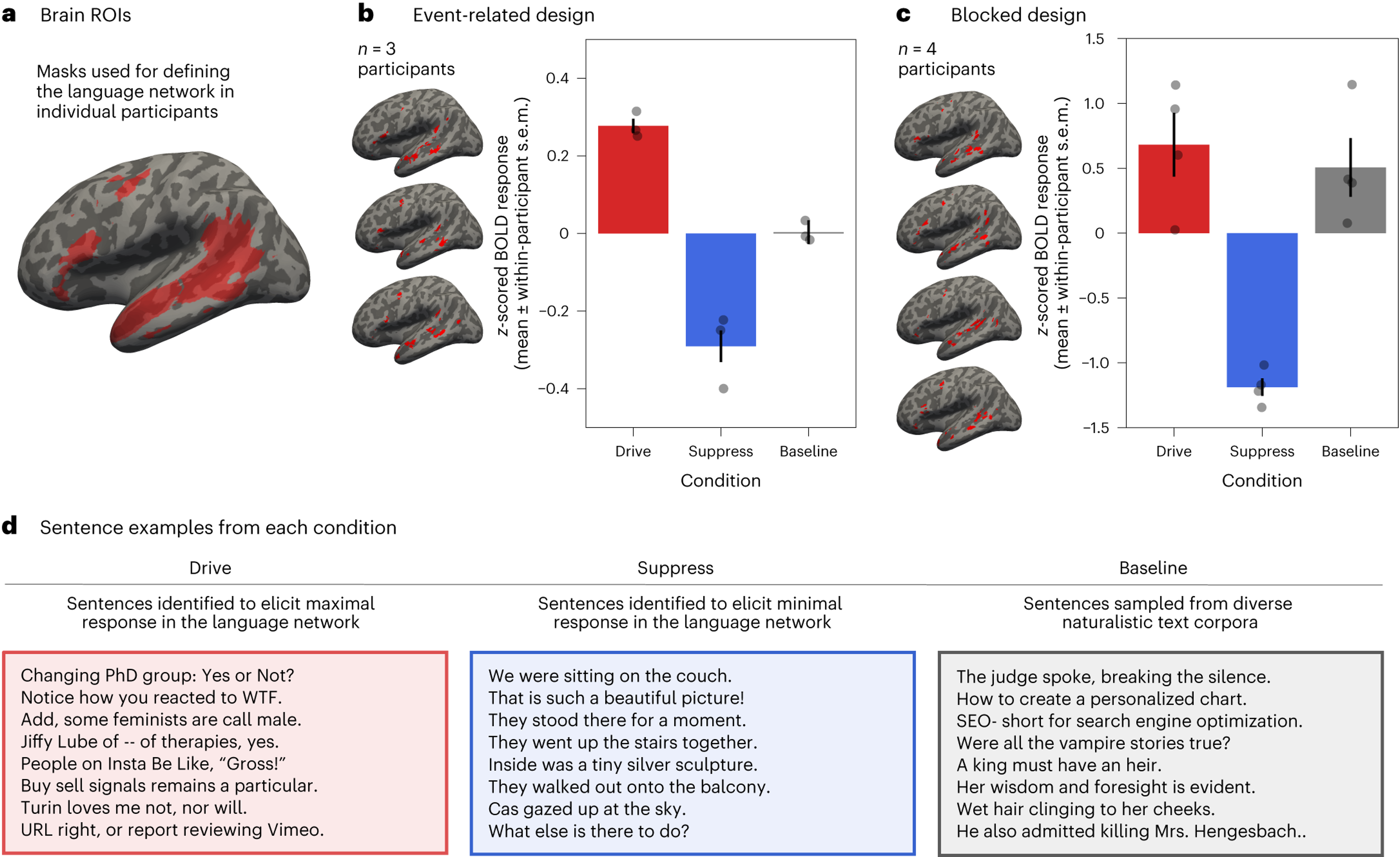

One example for applying NLP for brain simulation is presented in

The researchers recruited five participants who underwent functional MRI scans while reading 1,000 diverse sentences, measuring activity in their brain’s language network. In the first stage, they trained an encoding model on GPT2-XL sentence embeddings: a ridge regression that maps each embedding to the average blood-oxygen-level-dependent (BOLD) response of the language network. The model reached a prediction performance of r=0.38, a substantial correlation between the model’s predictions and the actual brain responses.
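The first stage can be sketched as a standard ridge-regression encoding model. The sketch assumes synthetic data: random vectors stand in for GPT2-XL sentence embeddings, and a noisy linear function of them stands in for the BOLD response, so the resulting correlation says nothing about the study’s r=0.38. The regularization strength `alpha=10.0` and the 80/20 held-out split are arbitrary; in practice, `alpha` would be tuned by cross-validation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumption): X plays the role of sentence embeddings,
# y the role of the average BOLD response of the language network.
n_sentences, dim = 1000, 64
X = rng.standard_normal((n_sentences, dim))
true_w = rng.standard_normal(dim) / np.sqrt(dim)
y = X @ true_w + rng.standard_normal(n_sentences)  # signal plus noise

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression on centered data; returns (weights, bias)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w

# Fit on 80% of sentences; score held-out predictions with Pearson r.
idx = rng.permutation(n_sentences)
train, test = idx[:800], idx[800:]
w, b = fit_ridge(X[train], y[train], alpha=10.0)
pred = X[test] @ w + b
r = np.corrcoef(pred, y[test])[0, 1]
print(f"held-out r = {r:.2f}")
```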

In the second stage, the researchers sought to determine whether the model could identify new sentences that would drive or suppress activity in a participant’s language network (i.e., would the new sentence increase or decrease their brain activity?). They approached this by searching through approximately 1.8 million sentences from large text corpora, using their model (GPT2-XL + regression) to predict which sentences would elicit maximal or minimal brain responses. These model-selected sentences were then presented to new human participants in a held-out test, where brain responses were again measured using fMRI. The findings revealed that the sentences predicted to drive neural activity indeed elicited significantly higher responses, while those predicted to suppress activity led to lower responses in the language network. In other words, they could predict brain activity using NLP.
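Given a fitted encoder, the search in the second stage amounts to scoring every candidate sentence and keeping the extremes. In this sketch, a random weight vector stands in for the fitted ridge model and random vectors stand in for candidate sentence embeddings; `k=250` is an arbitrary choice rather than the study’s selection size, and any additional filtering the researchers may have applied is not reproduced.

```python
import numpy as np

def select_drive_suppress(candidate_embeddings, w, b, k):
    """Score candidates with a linear encoder; return the indices of the k
    highest (drive) and k lowest (suppress) predicted responses."""
    preds = candidate_embeddings @ w + b
    order = np.argsort(preds)  # ascending predicted response
    return order[::-1][:k], order[:k], preds

rng = np.random.default_rng(1)
dim = 64
w = rng.standard_normal(dim) / np.sqrt(dim)  # stand-in for a fitted encoder
b = 0.0
candidates = rng.standard_normal((50_000, dim))  # stand-in for sentence embeddings
drive, suppress, preds = select_drive_suppress(candidates, w, b, k=250)
print(f"drive mean {preds[drive].mean():+.2f}, suppress mean {preds[suppress].mean():+.2f}")
```

For a corpus of around 1.8 million sentences, the embeddings would normally be computed and scored in batches rather than held in memory at once.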

The researchers found that sentences driving neural activity were often surprising, with moderate levels of grammaticality and plausibility, while activity-suppressing sentences were more predictable and easier to visualize. This indicates that less predictable sentences generally elicited stronger responses in the language network – a phenomenon interestingly captured by the language modeling task. Such findings have implications not only for understanding language processing in the brain but also for showcasing the potential of LLMs to non-invasively influence neural activity in higher-level cortical areas.
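Surprisal, the negative log-probability of each word given its context, is the per-token quantity whose average language models are trained to minimize, and a standard way to quantify how unpredictable a sentence is. As a swapped-in illustration (the study used GPT2-XL, not this model), the sketch below computes average surprisal under a toy bigram model with add-one smoothing; the three-sentence corpus is hypothetical.

```python
import math
from collections import Counter

def train_bigram(corpus):
    """Count histories and bigrams over tokenized sentences with boundary markers."""
    unigrams, bigrams = Counter(), Counter()
    for sent in corpus:
        tokens = ["<s>"] + sent + ["</s>"]
        unigrams.update(tokens[:-1])  # each token as a history for the next one
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    vocab = {t for sent in corpus for t in sent} | {"</s>"}
    return unigrams, bigrams, vocab

def mean_surprisal(sentence, unigrams, bigrams, vocab):
    """Average surprisal -log2 p(w_i | w_{i-1}) in bits, with add-one smoothing."""
    tokens = ["<s>"] + sentence + ["</s>"]
    V = len(vocab)
    total = 0.0
    for prev, cur in zip(tokens[:-1], tokens[1:]):
        p = (bigrams[(prev, cur)] + 1) / (unigrams[prev] + V)
        total -= math.log2(p)
    return total / (len(tokens) - 1)

corpus = [["the", "dog", "barked"], ["the", "dog", "slept"], ["the", "cat", "slept"]]
unigrams, bigrams, vocab = train_bigram(corpus)
s_predictable = mean_surprisal(["the", "dog", "slept"], unigrams, bigrams, vocab)
s_surprising = mean_surprisal(["dog", "the", "barked"], unigrams, bigrams, vocab)
print(f"{s_predictable:.2f} bits vs {s_surprising:.2f} bits")  # → 1.54 bits vs 2.79 bits
```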

Extracting Applicable Insights from Corpora

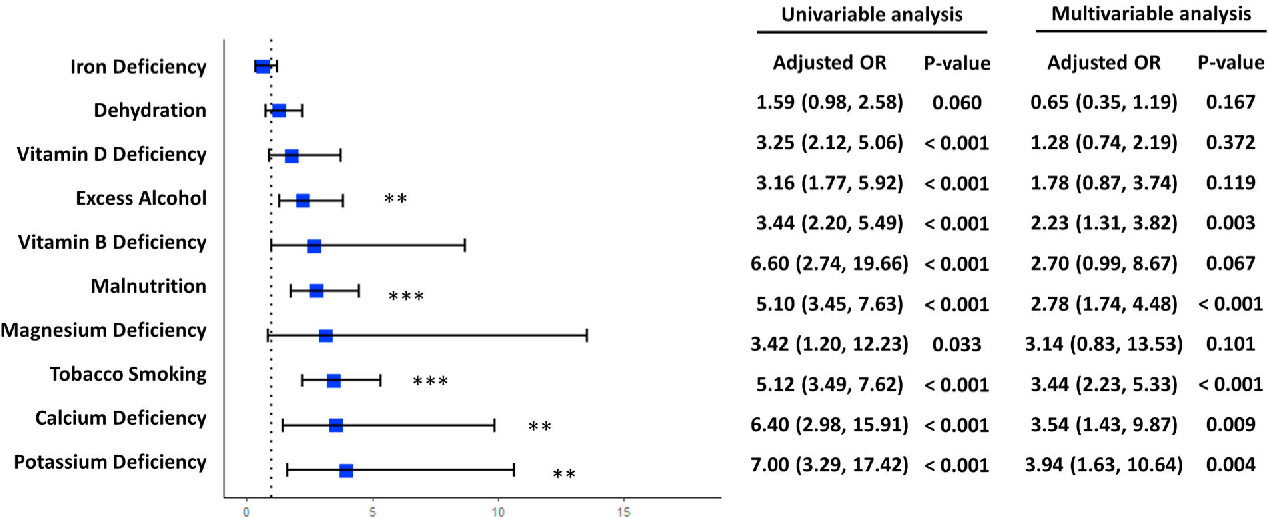

Finally, NLP can fulfill one of its original purposes – extracting insights from large corpora, in this case, scientific texts. While this strength of NLP can be applied across various scientific domains, here we focus on its extraction capabilities within human-centric sciences. For example,

Their analysis confirmed the prevalence of well-known risk factors, such as tobacco use and malnutrition, in over 50% of Alzheimer’s cases. However, it also called certain popular medical hypotheses into question. Despite extensive research linking cardiovascular risk factors – such as high-fat and high-calorie diets – to Alzheimer’s disease (1),

The work of

Importantly, to ensure the robustness of their findings,

In the next section, we define the unique aspects of this role and examine the responsibilities and challenges it entails.

Responsibility of the NLP Scientist

The role of NLP scientists goes far beyond choosing the most powerful model, writing code, and reporting results. To make a genuine contribution to scientific inquiry, they must thoroughly understand the problem, its objectives, and the limitations of the data, grasping the entire scope in order to make informed modeling choices. This may even require developing novel NLP methods tailored to the specific task or its evaluation. In short, an NLP scientist must keep three essential elements in mind: how they model the problem (especially with an emphasis on interpretability); how to evaluate their results in a rigorous manner; and who they collaborate with in order to strengthen and validate their research.

Interpretability-Aware Modeling

The NLP scientist must understand that their primary goal is often – perhaps counterintuitively – not to achieve the highest model performance, but to gain interpretable scientific insights, even if this means sacrificing a few points in performance scores. They should critically examine the model’s parameters and behaviors to understand why it predicts as it does, what patterns it captures from the data, and how these insights relate to the phenomena under study.

In this context, NLP interpretability serves a dual purpose. The first is common in NLP research: improving model performance through interpretability – debugging, identifying failure points, and refining the model. The second purpose, unique to “NLP for science”, aims to extract scientific insights. By using interpretable methods to clarify model predictions and uncover what the model encodes, we can transform predictive power into meaningful scientific knowledge.

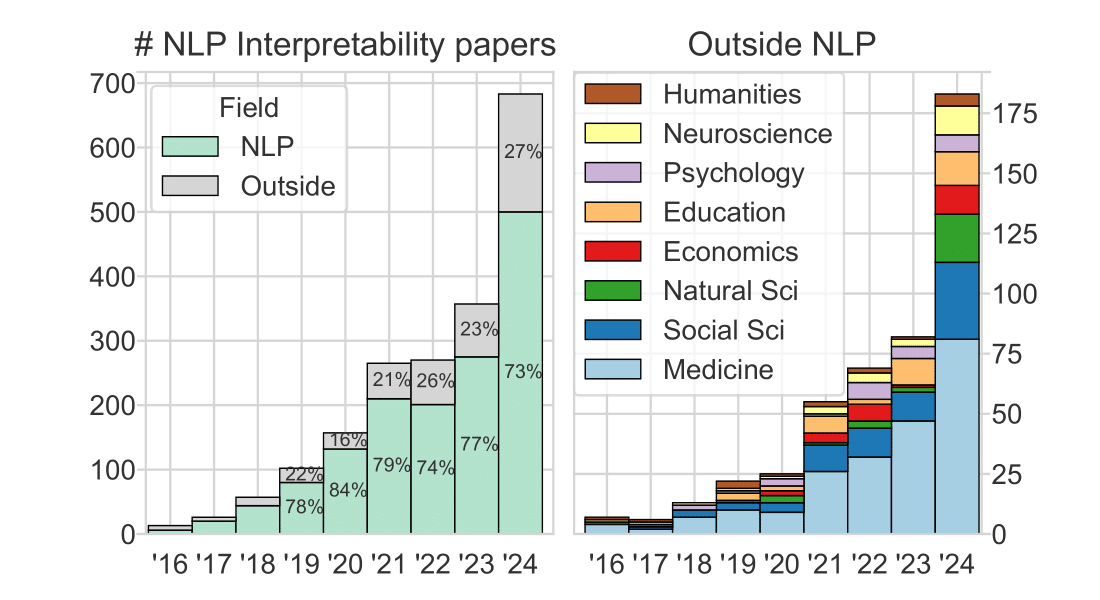

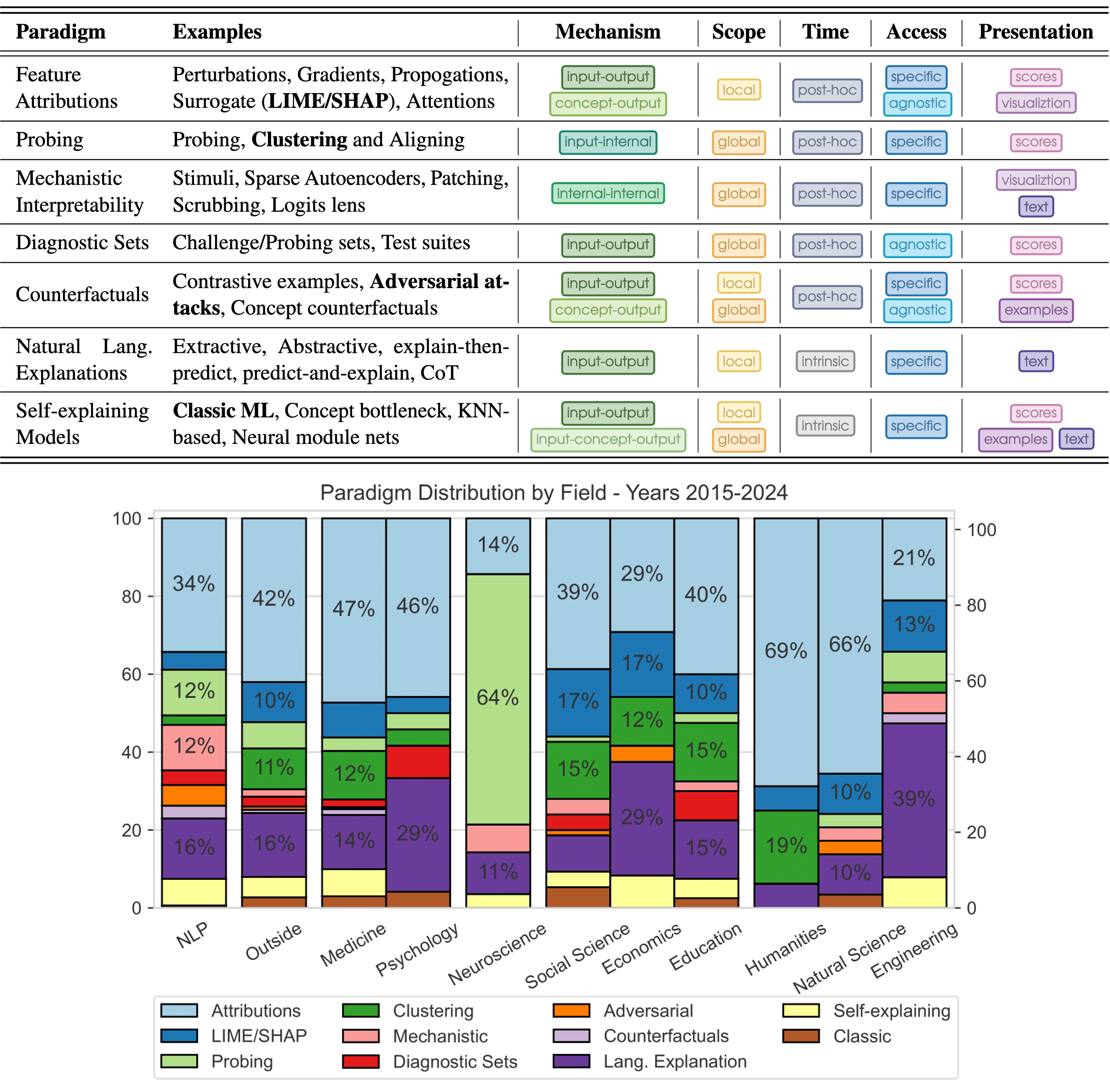

According to

In addition to designing models with interpretability in mind, NLP scientists must focus on understanding the underlying reasons for specific predictions rather than merely identifying correlations. Prioritizing techniques that distinguish between correlation and causation is essential for reporting sound conclusions, i.e., ones that accurately reflect a model’s decision-making process, also known as “faithful explanations”

Meeting Scientific Standards

NLP scientists must recognize that they are, in fact, conducting scientific research. Still, certain standards are often overlooked in AI literature. For instance,

Currently, in the NLP literature, if a model’s reported performance cannot be replicated or lacks robustness in different domains, the repercussions are minimal – practitioners and researchers may simply choose not to use it. However, in scientific fields, unsound conclusions can have harsh consequences. Therefore, one of the crucial roles of NLP scientists is to develop methods that ensure the soundness of their findings. Indeed, there is an established line of work in NLP that focuses on developing causal methods

The data used for NLP algorithms should also comply with scientific standards. In NLP research on linguistic risk factors for suicidal tendencies, for instance, data sources and annotation methods can vary significantly. Many NLP researchers might use Reddit posts labeled by subreddit (e.g., posts from r/SuicideWatch vs. posts from r/general) or crowd-sourced annotations (e.g., taking posts from those subreddits and manually labeling a post as positive if it indicates that its author is at risk of suicide). However, data with stronger scientific credibility might include posts from individuals who completed a standardized suicidal ideation questionnaire or, ideally, from those who have actually attempted suicide. Such choices profoundly impact the validity and applicability of the findings, particularly when trying to publish in non-CS journals.

Then, there’s the widely adopted approach of LLM-based annotation. NLP scientists should remember that even the strongest LLMs can introduce measurement errors (a mismatch between the true gold label and the LLM annotation), which can bias estimators and invalidate confidence intervals in downstream analyses. These errors can originate, for example, from biases inherent in the LLM (such as gender, racial, or social biases) or from sensitivity to variations in prompts or setups. Accordingly, part of the NLP scientist’s role is to develop robust statistical estimators for parameters of interest (such as political bias) that still make use of LLM annotations.

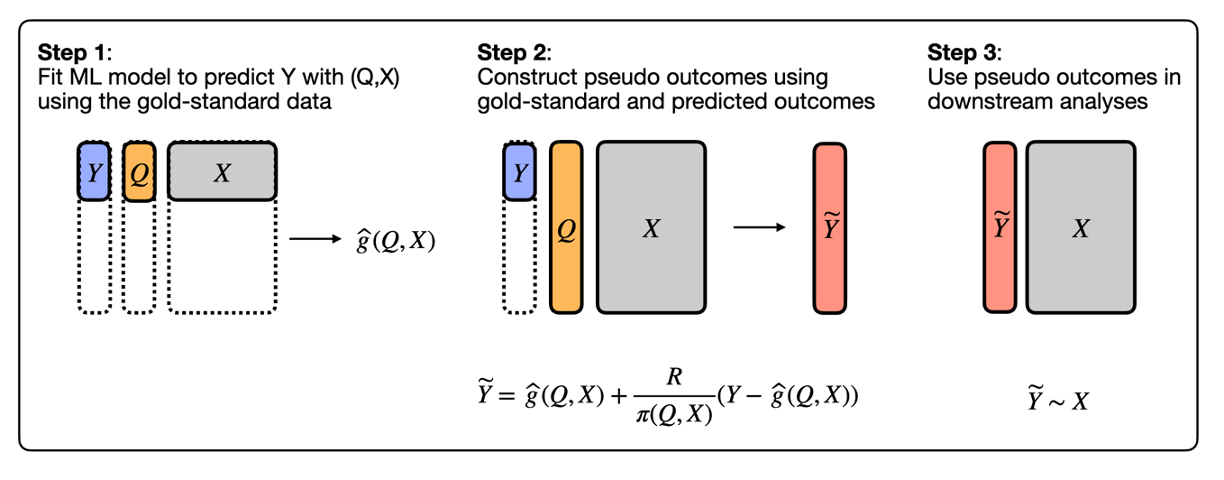

For example,

The core idea is as follows: human annotators provide gold-standard labels (\(Y\)) for a small subset of documents. Then, LLMs are used to predict synthetic (surrogate) labels \(Q\) for the entire dataset. The researchers assume that some explanatory variables (\(X\)) can be used to predict \(Y\); for example, when examining whether social media posts contain hate speech, explanatory variables might include the poster’s gender, education level, or political affiliation. In the first step, to construct an unbiased estimator, a machine learning model \(\hat{g}(Q,X)\) is fitted to predict \(Y\). In the second step, pseudo-labels are computed as follows:

\[\tilde{Y} = \hat{g}(Q, X) + \frac{R}{\pi(Q, X)}\left(Y - \hat{g}(Q, X)\right)\]

where \(R\) is an indicator variable denoting whether an example has a gold-standard label (\(R=1\)) or not (\(R=0\)), and \(\pi(Q, X)\) is the probability of an example receiving a gold-standard label (e.g., if experts annotate a uniformly random sample of \(n\) out of \(N\) documents, \(\pi = n/N\) for every document). The second term, \(\frac{R}{\pi(Q, X)}\left(Y - \hat{g}(Q, X)\right)\), acts as a bias-correction term: it is zero for unlabeled examples, and because it reweights the model’s errors on labeled examples by the inverse labeling probability, in expectation it closes the gap between \(\hat{g}(Q, X)\) and the true label. By the end of this step, we have large-scale corrected labels (\(\tilde{Y}\)), generated automatically, that yield unbiased downstream estimates even though the raw LLM annotations are biased. With these pseudo-labels, the NLP scientist can proceed to the third step: the intended downstream analysis, conducted in a scientifically sound manner.
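The three steps can be simulated in a few lines. This sketch assumes invented data-generating numbers: a binary covariate \(X\), a true label \(Y\) with 30% prevalence overall, and LLM annotations \(Q\) that over-flag positives (90% sensitivity, 15% false-positive rate); experts label a uniformly random 5% of documents, so \(\pi = n/N\). For simplicity, \(\hat{g}\) is the mean gold label within each \((Q, X)\) cell, fitted in-sample; a more careful implementation would fit it with cross-fitting.

```python
import numpy as np

rng = np.random.default_rng(42)
N, n_gold = 20_000, 1_000

# Synthetic data (assumption): covariate X, true label Y, LLM annotation Q.
X = rng.binomial(1, 0.5, N)
Y = rng.binomial(1, 0.2 + 0.2 * X)
Q = np.where(Y == 1, rng.binomial(1, 0.9, N), rng.binomial(1, 0.15, N))

# Experts label a uniformly random subset, so pi(Q, X) = n_gold / N everywhere.
R = np.zeros(N)
R[rng.choice(N, n_gold, replace=False)] = 1
pi = np.full(N, n_gold / N)
Y_obs = np.where(R == 1, Y, 0)  # gold labels are only observed where R == 1

# Step 1: fit g_hat(Q, X) on the gold subset (cell means of the gold labels).
g_hat = np.zeros(N)
for q in (0, 1):
    for x in (0, 1):
        cell = (Q == q) & (X == x)
        g_hat[cell] = Y_obs[cell & (R == 1)].mean()

# Step 2: pseudo-labels with the bias-correction term.
Y_tilde = g_hat + (R / pi) * (Y_obs - g_hat)

# Step 3: a downstream analysis, here simply the label's prevalence.
true_prev, naive, corrected = Y.mean(), Q.mean(), Y_tilde.mean()
print(f"true {true_prev:.3f} | naive LLM {naive:.3f} | corrected {corrected:.3f}")
```

Under these assumptions, the naive LLM-based prevalence overshoots by roughly 7.5 percentage points, whereas the estimate from the pseudo-labels lands close to the true value.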

Interdisciplinary Collaboration

Finally, for NLP to empower research, at least two experts are always required: one on the NLP side, and one on the scientific front. Without consulting domain experts, NLP scientists can find themselves investing in efforts that, however interesting, may not drive true impact. To illustrate this, let’s consider the task of NLP Dementia Detection (approached by dozens of researchers

In consultation with a domain expert – namely, a highly respected professor who leads a research center on Alzheimer’s – we asked “how NLP might help advance research on dementia detection”. Their response was straightforward:

"If the data used for training is collected from individuals already diagnosed with noticeable dementia, it wouldn’t be particularly helpful or impactful. When someone has already been diagnosed, the signs are usually quite detectable, so a predictive model wouldn’t add much value. The real challenge lies in identifying subtle, early signals that go unnoticed – in other words, detecting the onset of cognitive decline during the Mild Cognitive Impairment (MCI) phase. That’s where you should focus."

No one could have provided this invaluable advice apart from a domain expert. This insight prompts reflection on the hundreds of existing studies on dementia detection

On the other side of the equation, we should consider what scientific domain experts can gain from collaborating closely with NLP scientists. As NLP (and LLMs in particular) become increasingly prevalent as research tools, NLP scientists have a vital responsibility to guide their counterparts on both the strengths and the limitations of their methods. An interesting example is provided by

NLP scientists should always remember the unique expertise they bring to the table: they’re the specialists who understand the strengths of NLP, design technical solutions, run experiments, and interpret results. However, in “NLP for science”, close collaboration with domain experts is essential for success. These scientific counterparts contribute invaluable insights, whether in shaping the initial problem definition, guiding data collection and annotation, or gauging the broader impact of results. By working together from the outset, NLP scientists and domain experts can ensure that the research is not only technically robust but also genuinely meaningful and impactful.

Summary

With this blog post, we aim to inspire NLP experts to leverage their expertise and push the boundaries of human-centered sciences. We highlighted NLP’s unique capabilities across fields like neuroscience, psychology, behavioral economics, and beyond, illustrating how it can generate hypotheses, validate theories, perform simulations, and more. The time is ripe for NLP to drive scientific insights and transcend predictions alone, positioning language as a lens into human cognition and collective behavior. Moving forward, interdisciplinary collaboration, scientific rigor, and a commitment to meaningful research will be essential to make NLP a cornerstone of human-centered scientific discovery.